We Saved Up To 39.7% On Our AWS Batch Bill

How controlled testing across instance families and regions delivered massive cost savings without pipeline rewrites


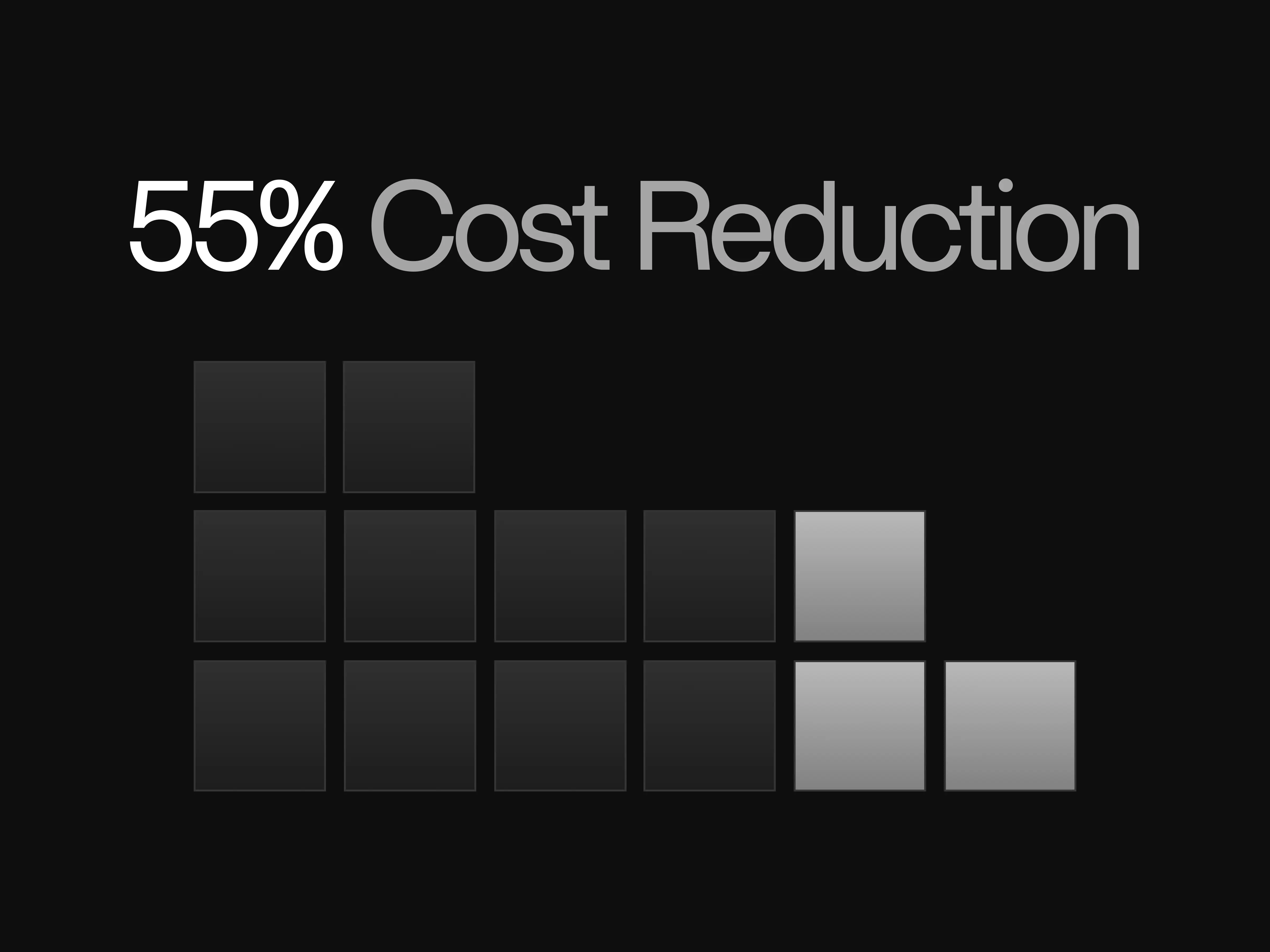

“We were able to consistently reach cost savings up to 39.7%, and as high as 55% in lower-cost regions. At enterprise scale, this equates to $250K–$300K in annual savings on a $1M AWS Batch spend, with potential to exceed $500K.”

Implementation

Overview

Bioinformatics teams rely on AWS Batch to run workflows such as RNA-seq. With the default AWS Batch configuration, however, workloads may be scheduled on oversized or inefficient instances, leading to unpredictable and inflated costs. Through controlled testing, Tracer showed that replacing this default setup with a data-driven benchmarking approach can achieve consistent average cost reductions of up to 39.7%, without requiring any pipeline rewrites. We also observed cost differences across regions, which can inform budgeting and deployment decisions.

“Tracer can deliver direct, measurable savings of up to 39.7%. Additionally, thanks to our optimized region selection, savings could exceed 55%.” — Michele Verrielo, Principal Engineer at Tracer

The Challenge

Running RNA-seq and other bioinformatics workloads on AWS Batch across multiple instance families and regions often leads to unpredictable and inflated costs, because AWS Batch automatically selects which instances to use.

Oversized instances waste resources, region selection affects both runtime and cost efficiency, and researchers spend time experimenting with configurations instead of doing science.

Therefore, organizations need a reliable, data-driven way to control AWS Batch spending without costly or risky pipeline rewrites.

At the same time, inefficiency is not limited to oversized instances. In compute-intensive workflows, pipelines can get stuck in infinite loops, hung threads, or stalled processes. These frozen jobs keep consuming compute and appear active from the outside even though they make no progress. Detecting them requires OS-level visibility into whether a job is still advancing, and catching them early prevents large amounts of wasted spend.

Tracer detects stalled or frozen jobs at the OS level so teams can halt spend on non-progressing pipelines.
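To make OS-level progress detection more concrete, here is a minimal sketch in Python, assuming a Linux host where each job's process counters can be sampled from `/proc`. It is a simple illustrative heuristic, not Tracer's detection logic: a job whose I/O counters stop moving over a window is flagged, and CPU time separates an idle hang from a busy loop. The `ProcSample` type, the `read_sample` and `classify_job` helpers, and the window and threshold values are all hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ProcSample:
    """Cumulative OS counters for one job process (hypothetical schema)."""
    cpu_seconds: float  # user + system CPU time consumed so far
    io_bytes: int       # bytes passed through read/write syscalls so far


def read_sample(pid: int) -> ProcSample:
    """Read counters for `pid` from Linux procfs (Linux-only; needs access to the process)."""
    with open(f"/proc/{pid}/stat") as f:
        # The command name may contain spaces, so split after its closing ')'.
        fields = f.read().rsplit(")", 1)[1].split()
    ticks = os.sysconf("SC_CLK_TCK")
    cpu = (int(fields[11]) + int(fields[12])) / ticks  # utime + stime (stat fields 14, 15)
    io = {}
    with open(f"/proc/{pid}/io") as f:
        for line in f:
            key, value = line.split(":")
            io[key] = int(value)
    return ProcSample(cpu_seconds=cpu, io_bytes=io["rchar"] + io["wchar"])


def classify_job(samples: Sequence[ProcSample], window: int = 5,
                 min_cpu_delta: float = 0.5) -> str:
    """Classify a job from its last `window` sampling intervals.

    "progressing"       - I/O counters advanced during the window
    "idle-stall"        - neither CPU nor I/O advanced (e.g. hung thread, deadlock)
    "busy-stall"        - CPU advanced but I/O did not (e.g. possible infinite loop)
    "insufficient-data" - fewer than window + 1 samples collected so far
    """
    if len(samples) < window + 1:
        return "insufficient-data"
    recent = samples[-(window + 1):]
    io_delta = recent[-1].io_bytes - recent[0].io_bytes
    cpu_delta = recent[-1].cpu_seconds - recent[0].cpu_seconds
    if io_delta > 0:
        return "progressing"
    if cpu_delta < min_cpu_delta:
        return "idle-stall"
    return "busy-stall"
```

A CPU-bound step that legitimately produces no I/O for a while would also be flagged as a busy stall, so in practice the window would need tuning per pipeline step.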

Internal Resolution

At Tracer, we ran controlled RNA-seq workloads on AWS Batch, using the default AWS Batch configuration as our baseline. We monitored the most significant cost and performance factors to understand where optimization would be most beneficial.

We thoroughly examined on-demand instance families (c5, m5, r5, etc.) and ran numerous cost experiments to quantify where real savings could be made, identify the best price/performance balance, and validate the cost reductions without any pipeline rewrites.
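Comparing instance families comes down to cost per run rather than hourly price, since a cheaper instance that runs longer can cost more overall. The sketch below assumes each benchmark run is summarized by a single on-demand hourly price and a wall-clock runtime (a simplification, since a Batch job can span several instances). `BenchmarkRun`, `saving_vs_baseline`, and `rank_families` are hypothetical names, and the numbers in the usage lines are placeholders, not measured results or actual AWS prices.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class BenchmarkRun:
    family: str              # instance family tested, e.g. "c5"
    hourly_price_usd: float  # on-demand price per hour (simplified: one price per run)
    runtime_hours: float     # wall-clock runtime of the pipeline

    @property
    def cost_usd(self) -> float:
        # Cost per run = price per hour times hours used.
        return self.hourly_price_usd * self.runtime_hours


def saving_vs_baseline(baseline_cost: float, candidate_cost: float) -> float:
    """Fractional saving of a candidate relative to the baseline (0.25 = 25% cheaper)."""
    if baseline_cost <= 0:
        raise ValueError("baseline cost must be positive")
    return 1.0 - candidate_cost / baseline_cost


def rank_families(baseline: BenchmarkRun,
                  candidates: List[BenchmarkRun]) -> List[Tuple[str, float, float]]:
    """Return (family, cost per run, saving vs baseline), cheapest first."""
    rows = [(c.family, c.cost_usd, saving_vs_baseline(baseline.cost_usd, c.cost_usd))
            for c in candidates]
    return sorted(rows, key=lambda row: row[1])


# Placeholder inputs for illustration only.
baseline = BenchmarkRun("default", hourly_price_usd=2.0, runtime_hours=3.0)
candidates = [
    BenchmarkRun("r5", hourly_price_usd=2.5, runtime_hours=2.0),
    BenchmarkRun("c5", hourly_price_usd=1.5, runtime_hours=2.5),
]
for family, cost, saving in rank_families(baseline, candidates):
    print(f"{family}: ${cost:.2f} per run, {saving:.1%} vs baseline")
```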

To keep the testing controlled, we configured compute environments to restrict the allowed instance families so that each run isolated a single family.
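One way to express this restriction is the `instanceTypes` list of a managed EC2 compute environment, which accepts either specific instance types or whole families such as `c5`. The sketch below only builds the keyword arguments for the boto3 Batch client's `create_compute_environment` call, so it runs without AWS credentials. The helper `single_family_compute_env` is hypothetical, and the environment names, subnet and security-group IDs, instance role, and vCPU limit are placeholders.

```python
from typing import Any, Dict, List


def single_family_compute_env(name: str, family: str, subnets: List[str],
                              security_group_ids: List[str], instance_role: str,
                              max_vcpus: int = 256) -> Dict[str, Any]:
    """Keyword arguments for a managed, on-demand EC2 compute environment
    that may only launch instances from one family."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",                      # on-demand capacity
            "allocationStrategy": "BEST_FIT_PROGRESSIVE",
            "minvCpus": 0,                      # scale to zero between runs
            "maxvCpus": max_vcpus,
            "instanceTypes": [family],          # e.g. "c5" allows any c5 size
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
            "instanceRole": instance_role,      # ECS instance profile name or ARN
        },
    }


# One isolated environment per family under test (placeholder network values).
envs = [
    single_family_compute_env(
        name=f"rnaseq-bench-{family}",
        family=family,
        subnets=["subnet-PLACEHOLDER"],
        security_group_ids=["sg-PLACEHOLDER"],
        instance_role="ecsInstanceRole",
    )
    for family in ("c5", "m5", "r5")
]

# With credentials configured, each environment could then be created with:
#   import boto3
#   batch = boto3.client("batch")
#   for env in envs:
#       batch.create_compute_environment(**env)
```

Each environment would then be attached to its own job queue, so that a benchmark run can only land on instances from one family.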

We also ran controlled benchmarks across different instance strategies, including multi-region tests to measure regional cost differences, and used our centralized observability platform to track cost and performance.
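Regional differences are easiest to compare as a percentage relative to a reference region, with the same pipeline and instance configuration used everywhere. This is a minimal sketch: `regional_differences` is an illustrative helper, and the region labels and per-run costs are hypothetical placeholders, not Tracer's measurements or AWS prices.

```python
from typing import Dict


def regional_differences(cost_by_region: Dict[str, float],
                         reference: str) -> Dict[str, float]:
    """Cost of each region relative to `reference`; -0.2 means 20% cheaper."""
    ref_cost = cost_by_region[reference]
    if ref_cost <= 0:
        raise ValueError("reference cost must be positive")
    return {region: cost / ref_cost - 1.0 for region, cost in cost_by_region.items()}


# Placeholder per-run costs (USD) for one identical benchmark in three regions.
costs = {"region-a": 10.0, "region-b": 8.0, "region-c": 12.5}
diffs = regional_differences(costs, reference="region-a")
cheapest = min(costs, key=costs.get)
for region, diff in sorted(diffs.items(), key=lambda item: item[1]):
    print(f"{region}: {diff:+.1%} vs region-a")
```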

Measurable Impact

By replacing the default AWS Batch setup with our data-driven benchmarking approach, we consistently reduced costs by up to 39.7% across typical RNA-seq workloads.

Our analysis also showed that organizations could save significantly more by running workloads in lower-cost regions. This is a useful input for budgeting and deployment planning, even if workloads stay in their current location.

All of this was achieved without a single pipeline rewrite, making the optimization purely an infrastructure-level improvement. With automated benchmarking and recommendations from our Tracer AI agent, research teams can save both money and time.

Takeaway

We consistently achieved cost savings of up to 39.7% compared with the default AWS Batch configuration and observed how costs vary across regions.

At scale, this equates to $250K–$300K in annual savings on a $1M AWS Batch spend, with potential to exceed $500K.
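The dollar figures follow from multiplying annual AWS Batch spend S by the effective cost reduction r. Working backwards from the stated $1M spend shows which reduction rates each figure corresponds to:

```latex
\begin{aligned}
\text{annual savings} &= r \cdot S, \qquad S = \$1{,}000{,}000 \\
\$250\text{K to } \$300\text{K} &\;\Rightarrow\; r = 0.25 \text{ to } 0.30 \\
\text{annual savings} > \$500\text{K} &\iff r > 0.50
\end{aligned}
```

For example, the 55% reduction seen in lower-cost regions would correspond to 0.55 × $1M = $550K per year, in line with the potential to exceed $500K.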

“These results came from infrastructure optimization, supported by Tracer's automated benchmarking and AI-driven recommendations. This allows researchers to spend less time tuning configurations and more time focusing on science.”— Aliya Yermakhan, Head of Front End Engineering at Tracer